Dapper

The Distributed and Parallel Program Execution Runtime

Why Dapper?

We live in interesting times, where breakthroughs in the sciences increasingly depend on the growing availability of commoditized, networked computational resources. With the help of the cloud or grid, computations that would otherwise run for days on a single desktop machine now have distributed and/or parallel formulations that can churn through input sets ten times as large, in a matter of hours, on a hundred machines. As alluring as the idea of strength in numbers may be, physical hardware alone is not enough: a programmer still has to craft the actual computation that will run on it. Consequently, the high value placed on human effort and creativity calls for a programming environment that enables, and even encourages, succinct expression of distributed computations without sacrificing generality.

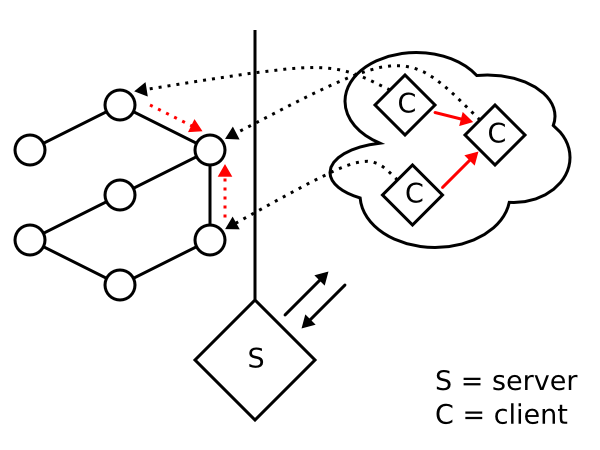

Dapper, standing for Distributed and Parallel Program Execution Runtime, is one such tool. It bridges the scientist/programmer's high-level specifications, which capture the essence of a program, and the low-level mechanisms that reflect the unsavory realities of distributed and parallel computing. Under its dataflow-oriented approach, Dapper enables users to code locally in Java and execute globally on the cloud or grid. The user first writes codelets: small snippets of code that perform simple tasks and do not, in themselves, constitute a complete program. He or she then specifies how those codelets, seen as vertices in the dataflow, transmit data to each other via edge relations. The resulting directed acyclic dataflow graph is a complete program interpretable by the Dapper server, which, upon being contacted by long-lived worker clients, coordinates its distributed execution.
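
To make the codelet/edge vocabulary concrete, here is a minimal, self-contained Java sketch of the idea: codelets as vertices, edges carrying each codelet's output to its consumers, and a single-process executor that runs a codelet once all of its inputs are available (Kahn's topological ordering). The `Codelet` interface and `Dataflow` class are hypothetical names chosen for illustration, not Dapper's actual API, which is documented in the user manual and Javadocs. Unlike the real server, this sketch runs everything in one thread instead of farming codelets out to worker clients, and it uses Java 8 syntax for brevity.

```java
import java.util.*;

// Hypothetical illustration of a dataflow of codelets; NOT Dapper's API.
public class DataflowSketch {

    /** A codelet: a small task mapping its inputs (keyed by upstream codelet name) to one output. */
    interface Codelet {
        Object run(Map<String, Object> inputs);
    }

    /** A directed acyclic graph whose vertices are codelets and whose edges carry data. */
    static final class Dataflow {
        private final Map<String, Codelet> codelets = new LinkedHashMap<>();
        private final Map<String, List<String>> successors = new HashMap<>();
        private final Map<String, List<String>> predecessors = new HashMap<>();

        Dataflow add(String name, Codelet c) {
            codelets.put(name, c);
            successors.putIfAbsent(name, new ArrayList<>());
            predecessors.putIfAbsent(name, new ArrayList<>());
            return this;
        }

        /** Declares that the output of {@code from} is an input of {@code to}. */
        Dataflow edge(String from, String to) {
            successors.get(from).add(to);
            predecessors.get(to).add(from);
            return this;
        }

        /** Runs each codelet once its inputs are ready (Kahn's algorithm) and returns all outputs. */
        Map<String, Object> execute() {
            Map<String, Integer> pending = new HashMap<>();
            Deque<String> ready = new ArrayDeque<>();
            for (String name : codelets.keySet()) {
                int n = predecessors.get(name).size();
                pending.put(name, n);
                if (n == 0) ready.add(name);
            }
            Map<String, Object> outputs = new LinkedHashMap<>();
            while (!ready.isEmpty()) {
                String name = ready.poll();
                Map<String, Object> inputs = new HashMap<>();
                for (String p : predecessors.get(name)) inputs.put(p, outputs.get(p));
                outputs.put(name, codelets.get(name).run(inputs));
                // Release any successor whose last missing input just arrived.
                for (String s : successors.get(name)) {
                    if (pending.merge(s, -1, Integer::sum) == 0) ready.add(s);
                }
            }
            if (outputs.size() != codelets.size()) {
                throw new IllegalStateException("dataflow graph contains a cycle");
            }
            return outputs;
        }
    }

    public static void main(String[] args) {
        // Two independent sources feed a sum; the sum feeds a formatter.
        Dataflow flow = new Dataflow()
            .add("a", in -> 3)
            .add("b", in -> 4)
            .add("sum", in -> (Integer) in.get("a") + (Integer) in.get("b"))
            .add("report", in -> "sum = " + in.get("sum"))
            .edge("a", "sum").edge("b", "sum").edge("sum", "report");
        System.out.println(flow.execute().get("report")); // prints "sum = 7"
    }
}
```

Note that the codelets "a" and "b" have no edge between them, so a distributed runtime would be free to execute them on different machines at the same time; only the edges constrain ordering.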

Under the Dapper model, the user no longer needs to worry about traditionally ad hoc aspects of managing the cloud or grid, which include handling data interconnects and dependencies, recovering from errors, distributing code, and starting jobs. Perhaps more importantly, Dapper provides an entire Java-based toolchain and runtime for framing nearly all coarse-grained distributed computations in a consistent format that allows for rapid deployment and easy sharing with other researchers.

Features

To offer prospective users a glimpse of the system's capabilities, we quickly summarize many of Dapper's features that improve upon existing systems, or are new altogether:

- A code distribution system that allows the Dapper server to transmit requisite program code over the network and have clients dynamically load it. As a consequence, barring external executables, updates to Dapper programs only need to happen on the server side.

- A powerful subflow embedding method for dynamically modifying the dataflow graph at runtime.

- A runtime in vanilla Java, a language that many are no doubt familiar with. Aside from a recent JVM and, optionally, Graphviz Dot, Dapper is self-contained.

- A robust control protocol. The Dapper server expects any number of clients to fail, at any time, and has customizable re-execution and timeout policies to cope. Consequently, one can start and stop (long-lived) clients without fear of putting the entire system into an inconsistent state.

- Flexible semantics that allow data transfers via files or TCP streams.

- Interoperability with firewalls. Since your local cloud or grid probably sits behind a firewall, we have devised special semantics for streaming data transfers.

- Liberal licensing terms. Dapper is released under the New BSD License to prevent contamination of your codebase.

- Operation as an embedded application. The user manual describes the programming API for running the Dapper server inside an application such as Apache Tomcat.

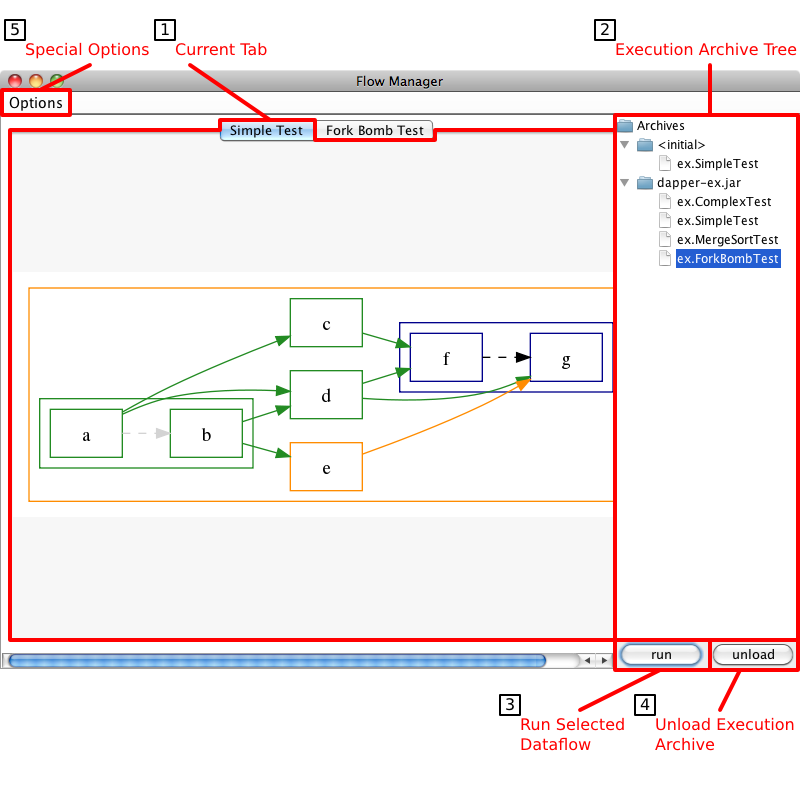

- Operation as a standalone user interface. With it, one can run off-the-shelf demos and learn core concepts from visual examples. By following a minimal set of conventions, one can then bundle one's own Dapper programs as execution archives and get real-time dataflow status and debugging feedback.
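
The robust control protocol listed above can be illustrated with a small, self-contained Java sketch of one plausible re-execution and timeout policy: each task handed to a worker is leased for a fixed timeout; a task whose lease expires (for instance, because its client died) or whose worker reports failure goes back on the queue; and a task is abandoned after a maximum number of attempts. The lease-based design and every name in it (`LeaseScheduler`, `acquire`, `complete`, `fail`, `expireLeases`) are assumptions made for illustration, not Dapper's actual protocol or API. Time is passed in explicitly so that the behavior is deterministic.

```java
import java.util.*;

// Hypothetical sketch of a re-execution/timeout policy; NOT Dapper's implementation.
public class LeaseScheduler {

    private final long timeoutMillis;
    private final int maxAttempts;
    private final Deque<String> queue = new ArrayDeque<>();
    private final Map<String, Long> leaseDeadlines = new HashMap<>();
    private final Map<String, Integer> attempts = new HashMap<>();
    private final Set<String> done = new HashSet<>();
    private final Set<String> abandoned = new HashSet<>();

    public LeaseScheduler(long timeoutMillis, int maxAttempts) {
        this.timeoutMillis = timeoutMillis;
        this.maxAttempts = maxAttempts;
    }

    public void submit(String task) {
        queue.add(task);
    }

    /** Hands the next queued task to a worker and starts its lease; returns null if none is queued. */
    public String acquire(long now) {
        String task = queue.poll();
        if (task != null) {
            attempts.merge(task, 1, Integer::sum);
            leaseDeadlines.put(task, now + timeoutMillis);
        }
        return task;
    }

    /** Records success for a currently leased task; reports for tasks with no live lease are ignored. */
    public void complete(String task) {
        if (leaseDeadlines.remove(task) != null) done.add(task);
    }

    /** Records an explicit failure reported by a worker holding a live lease. */
    public void fail(String task) {
        if (leaseDeadlines.remove(task) != null) requeueOrAbandon(task);
    }

    /** Reclaims every task whose lease has run out, e.g. because its client crashed. */
    public void expireLeases(long now) {
        Iterator<Map.Entry<String, Long>> it = leaseDeadlines.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> e = it.next();
            if (e.getValue() <= now) {
                it.remove();
                requeueOrAbandon(e.getKey());
            }
        }
    }

    private void requeueOrAbandon(String task) {
        if (attempts.get(task) < maxAttempts) queue.add(task);
        else abandoned.add(task);
    }

    public boolean isDone(String task) { return done.contains(task); }

    public boolean isAbandoned(String task) { return abandoned.contains(task); }

    public static void main(String[] args) {
        LeaseScheduler s = new LeaseScheduler(1_000, 2);
        s.submit("codelet-1");
        String t = s.acquire(0);         // the first worker takes the task...
        s.expireLeases(1_500);           // ...and silently dies, so its lease lapses
        String retry = s.acquire(1_500); // a second worker picks the task up again
        s.complete(retry);
        System.out.println(t.equals(retry) + " " + s.isDone("codelet-1")); // prints "true true"
    }
}
```

Because a worker's death is detected only through lease expiry, clients can be started and stopped at any time without any explicit deregistration step; the trade-off is that the timeout must be longer than the slowest legitimate task.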

Links

If Dapper does not work for you, be sure to check out these other distributed computing tools:

- Dryad -- Microsoft's .NET-based answer to Google infrastructure. Substantively the closest system to Dapper.

- MapReduce and Hadoop -- Google's internal computing engine and Apache's open source rendition of it.

- Taverna -- In case you're a bioinformatician looking for something that takes advantage of existing biological data processing pipelines, and not building your own.

- Data Intensive Supercomputing -- Randy Bryant's argument for why the next computing frontier lies with Google/Yahoo/Microsoft-scale data centers, and why academicians should get involved.

- Paralex -- A distributed programming model that was far ahead of its time.

Downloads and Documentation

Here's how to obtain Dapper and/or learn more about it:

- Downloads of source and Jar distributions.

- A user manual detailing the rationale behind Dapper, ready-to-use demos, and the programming API.

- Javadocs of member classes, or, for the eternally curious, Doxygen of the native components.

- A Git repository of browseable code.

To get up and running quickly, download the two Jars dapper.jar and dapper-ex.jar (modulo some version number x.xx).

You will need Graphviz Dot and Java 1.6 or later.

Start the user interface with the command

`java -jar dapper.jar`

or, if your operating system associates the .jar extension with a JRE, by clicking on its icon.

Drag and drop the Jar of examples, dapper-ex.jar, into the box containing the "Archives" tree.

You will see a few selections; select the one that says "ex.SimpleTest", and then press the "run" button.

Now start at least four worker clients by repeatedly issuing the command

`java -cp dapper.jar org.dapper.client.ClientDriver`
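
Since the clients are long-lived, each invocation of the command above typically keeps its terminal busy. As a convenience, the following small Java program starts several clients from one place using the standard `ProcessBuilder` API. It simply repeats the command above; the class name `LocalWorkers` and the default count of four are illustrative choices, not part of Dapper. It assumes that `dapper.jar` is in the current working directory and that `java` is on the `PATH`.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Illustrative helper (not part of Dapper): launches N local worker clients
// using the same command line as in the quick-start instructions.
public class LocalWorkers {

    /** Builds one ProcessBuilder per worker; each inherits this console for its output. */
    static List<ProcessBuilder> builders(int count) {
        List<ProcessBuilder> result = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            ProcessBuilder pb = new ProcessBuilder(
                "java", "-cp", "dapper.jar", "org.dapper.client.ClientDriver");
            pb.inheritIO();
            result.add(pb);
        }
        return result;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        int count = args.length > 0 ? Integer.parseInt(args[0]) : 4;
        List<Process> workers = new ArrayList<>();
        for (ProcessBuilder pb : builders(count)) {
            workers.add(pb.start());
        }
        // Destroy the clients when this launcher exits or is interrupted with Ctrl-C.
        Runtime.getRuntime().addShutdownHook(
            new Thread(() -> workers.forEach(Process::destroy)));
        // The clients are long-lived, so this normally blocks until they are stopped.
        for (Process p : workers) {
            p.waitFor();
        }
    }
}
```

Compile it with `javac LocalWorkers.java` and run `java LocalWorkers`, optionally passing a different worker count as the first argument.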

By now, you should see the user interface begin to step through the "Simple Test" computation. Although everything is happening on the local machine, the Dapper server embedded in the user interface is completely agnostic to where its clients actually run. Thus, fully distributed operation is intrinsically no harder than the steps laid out above.

Alternatively, all of the above can be accomplished by downloading the dapper-src.tgz distribution and running the test.py script (on Unix-based systems) or the buildandtest.exe executable (on Windows) from the Dapper base directory.

Finally, Eclipse users can directly import the source distribution or a version control working copy, both of which contain .project and .classpath files.

Note that the IvyDE plugin is required to set up the class path properly.

If you think Dapper is a promising solution to your distributed computing needs, have a look at the user manual for a much more in-depth tour. Also, consider downloading the full tarball distribution and building the Java sources.

Do not hesitate to contact the administrator if you have any lingering doubts and/or questions.